XAI: Building Trust and Transparency in Machine Learning Models

As AI becomes more pervasive in our lives, it’s becoming increasingly important to understand how these systems work and to be able to trust their decisions. Explainable AI (XAI) is a growing field that aims to create more transparent and interpretable machine learning models. In this article, we’ll explore what XAI is, why it’s important, and how techniques such as decision trees and rule-based systems can be used to build more transparent and trustworthy AI systems.

Introduction

Artificial intelligence (AI) is increasingly being used in a wide range of applications, from chatbots to autonomous vehicles. However, as AI becomes more ubiquitous, concerns have arisen about its transparency and accountability. In particular, there is a growing demand for AI systems that are explainable, meaning that humans can understand and interpret how they reach their outputs. This article explores the concept of explainable AI, why it matters for building trust in AI systems, and how techniques such as decision trees and rule-based systems can be used to create more transparent and interpretable models.

What is Explainable AI?

Explainable AI (XAI) is a subfield of AI that develops methods and techniques for making AI systems more transparent and interpretable. Its goal is to let humans understand the reasoning behind an AI system's decisions and actions, which is critical for building trust in these systems. XAI is especially important in high-stakes applications such as healthcare and finance, where decisions made by AI systems can have significant consequences.

Why is Explainable AI Important?

The lack of transparency and interpretability in AI systems is a major obstacle to their widespread adoption. If people cannot understand how an AI system is making decisions or why it is taking a particular action, they are unlikely to trust or rely on that system. This is particularly true in applications where human lives or livelihoods are at stake.

Another reason XAI is important is that it can help identify and mitigate biases in AI systems. Many machine learning models are trained on biased data, which can lead to discriminatory outcomes. Making a model's reasoning transparent and interpretable makes these biases easier to find and correct, for example by revealing that the model relies heavily on a sensitive attribute or on a proxy for one.

How Can We Achieve Explainable AI?

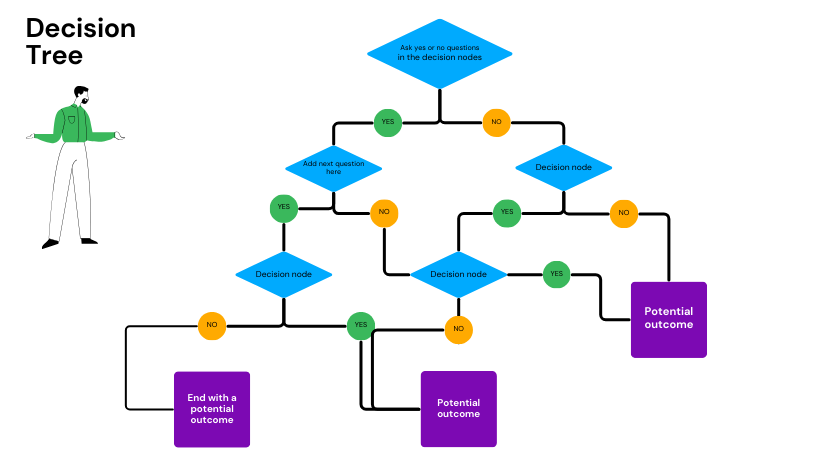

Several techniques and approaches can be used to achieve XAI. One common approach is to use decision trees, a type of model that represents decisions and their possible outcomes as a tree-like structure of simple tests. Small decision trees are easy to read, and any individual prediction can be traced by following the path of conditions from the root of the tree to the leaf that produced it.

Example of a decision tree
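To make the idea of tracing a decision concrete, here is a minimal Python sketch of a hand-built decision tree that returns both its prediction and the path of conditions that produced it. The loan-approval features (`income`, `debt_ratio`), the thresholds, and the `Node`, `Leaf`, and `predict_with_path` names are hypothetical, chosen only for illustration. In practice the tree would be learned from data, for example with scikit-learn, whose `export_text` function prints a fitted tree's rules.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str  # final decision returned by the tree


@dataclass
class Node:
    feature: str      # name of the feature tested at this node
    threshold: float  # go left if value <= threshold, else right
    left: "Tree"
    right: "Tree"


Tree = Union[Node, Leaf]


def predict_with_path(tree: Tree, x: dict[str, float]) -> tuple[str, list[str]]:
    """Walk from the root to a leaf, recording each test that was applied."""
    path: list[str] = []
    node = tree
    while isinstance(node, Node):
        value = x[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical loan-approval tree (thresholds are illustrative only).
loan_tree = Node(
    "income", 40_000,
    left=Leaf("reject"),
    right=Node("debt_ratio", 0.35, left=Leaf("approve"), right=Leaf("reject")),
)

label, path = predict_with_path(loan_tree, {"income": 52_000, "debt_ratio": 0.42})
print(label)  # reject
for step in path:
    print("  because", step)
```

Because every prediction comes with its path, a reviewer can see that this applicant passed the income test and was rejected because of the debt ratio.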

Another approach is to use rule-based systems, which consist of sets of rules that define the conditions under which certain actions should be taken. Because every decision can be traced back to the rule that triggered it, such systems are transparent by construction. Rule-based systems are often used in expert systems, which are AI systems that simulate the decision-making capabilities of a human expert in a particular domain.
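A rule-based system can be sketched in a few lines of Python. The example below is a simplified, hypothetical transaction-screening system: the rules, thresholds, and field names (`amount`, `country`, `home_country`, `tx_last_hour`) are invented for illustration. It uses a simple first-match strategy, whereas real expert systems typically rely on an inference engine with forward or backward chaining. The point is that the explanation comes for free: the decision is returned together with the name of the rule that produced it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str                          # human-readable label, used as the explanation
    condition: Callable[[dict], bool]  # returns True when the rule applies
    action: str                        # decision taken if the rule fires


# Hypothetical transaction-screening rules, listed in priority order.
RULES = [
    Rule("R1: large amount from a foreign country",
         lambda t: t["amount"] > 5_000 and t["country"] != t["home_country"],
         "block"),
    Rule("R2: large amount", lambda t: t["amount"] > 5_000, "manual review"),
    Rule("R3: burst of transactions", lambda t: t["tx_last_hour"] > 10, "manual review"),
]


def decide(facts: dict, rules: list[Rule] = RULES) -> tuple[str, str]:
    """Return (action, explanation) from the first rule whose condition holds."""
    for rule in rules:
        if rule.condition(facts):
            return rule.action, rule.name
    return "approve", "no rule matched"


action, reason = decide(
    {"amount": 7_200, "country": "FR", "home_country": "US", "tx_last_hour": 1}
)
print(action, "|", reason)  # block | R1: large amount from a foreign country
```

Ordering the rules by priority is a deliberate design choice here: it makes conflicts between rules (R1 and R2 both match the example above) resolve predictably, and it keeps the explanation to a single rule.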

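A third approach is to use model-agnostic techniques such as LIME (Local Interpretable Model-agnostic Explanations) or SHAP (SHapley Additive exPlanations). These techniques can be applied to any type of machine learning model and explain individual predictions: LIME fits a simple, interpretable surrogate model around the input being explained, while SHAP attributes a prediction to each input feature using Shapley values from cooperative game theory.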
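The idea behind SHAP can be illustrated by computing exact Shapley values by brute force. The sketch below assumes one common (but not the only) definition of a coalition's value: features in the coalition keep their actual values and all others are replaced by a baseline input. Each feature's attribution is its weighted average marginal contribution over all coalitions S of the other features, phi_i = sum over S of |S|! (n - |S| - 1)! / n! * [v(S ∪ {i}) - v(S)]. This takes on the order of 2^n model evaluations, so it is only practical for a handful of features; the `shap` library relies on approximations and model-specific algorithms instead. The names `shapley_values`, `linear`, and `product` are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley attributions for model(x) relative to model(baseline)."""
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        # Features in the coalition keep their real values; the rest are
        # "removed" by substituting the baseline value.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalitions of the other features, size 0..n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def linear(z):
    return 3 * z[0] - 2 * z[1] + 0.5 * z[2]


def product(z):
    return z[0] * z[1]


print([round(v, 6) for v in shapley_values(linear, [1, 2, 4], [0, 0, 0])])
# [3.0, -4.0, 2.0]: each feature gets its coefficient times (x_i - baseline_i)
print(shapley_values(product, [2, 3], [0, 0]))
# [3.0, 3.0]: the interaction is split evenly between the two features
```

The attributions always sum to model(x) - model(baseline) (the efficiency property), which is what makes these explanations additive.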

Conclusion

Explainable AI is critical for building trust and transparency in AI systems. By making AI systems more transparent and interpretable, we can identify and correct biases, build trust with users, and ensure that AI is used ethically and responsibly. There are many techniques and approaches to achieving XAI, and organizations should carefully consider their options when implementing AI systems.
